Why would you use Hive in 2024? What is stopping you from migrating away from it?

How often do you wish there was a better alternative? Let me know in the comments and …

Fear not! Iceberg is here and it is here to stay!

Created at Netflix, donated to the Apache Software Foundation in 2018, and promoted to a top-level Apache project in 2020, Apache Iceberg is an open source table format and a contender to replace the retiring Hive managed tables, saving us all from the Hive-related pain points along the way. The truth is: Apache Iceberg does its job pretty well!

It is all about flexibility

As Hive began to age, its weak spots started to show whenever *real* big data was involved. Overly frequent writes left a pile of small files under each partition, the auto-compaction mechanism needed huge resources to be effective and fast, metadata manipulation was a pain in the …, and the efficiency of concurrent operations kept declining. All of these were nails in the coffin in which Hive was to be buried, waiting for the hammer to hit. But the metaphorical hammer was quite slow to arrive, until Iceberg took the stage.

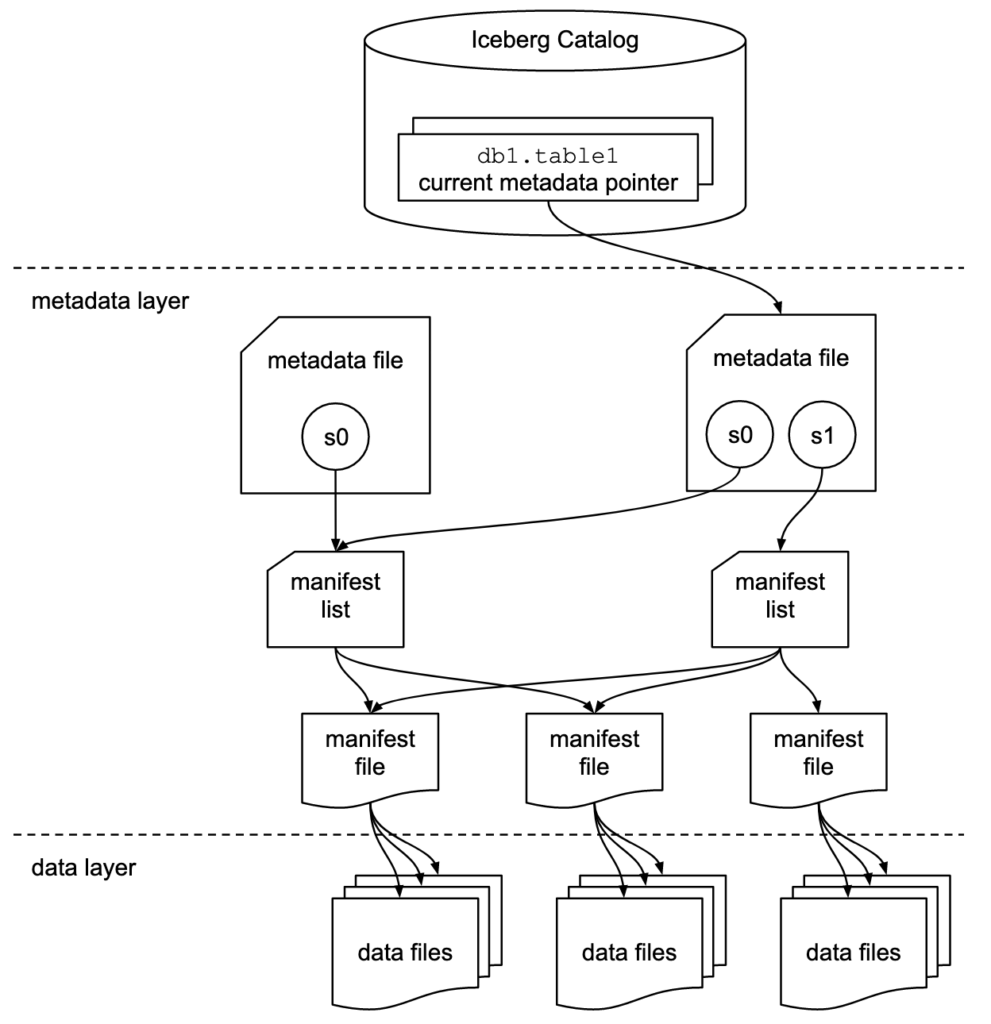

As Netflix had an abundance of *petabyte-scale* projects, they had all these issues served on a silver platter and took matters into their own hands.

Apache Iceberg gives its users two main layers of information to play with: data and metadata.

The data layer is quite self-explanatory: it contains the data, stored by default as *parquet* files. The metadata layer, on the other hand, consists of *json* and *avro* files with information describing, no joke, the data files. As simple as it sounds, this separation brings a lot of flexibility and allows for the implementation of the coolest-sounding features, like:

- Time travel and rollbacks

- Branching

- Hidden partitioning

- Full Schema Evolution
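The metadata layer is not just files on disk: Iceberg also exposes it through queryable metadata tables. Here is a minimal sketch of how you could peek at it, assuming Spark SQL with an Iceberg catalog named `prod` and the same `prod.db.table` placeholder used throughout this article (the selected columns are only a subset of what the `files` table returns):

```sql
-- Commit history: which snapshot was made current, and when
SELECT * FROM prod.db.table.history;

-- Manifest files (avro) tracked by the current snapshot
SELECT * FROM prod.db.table.manifests;

-- Data files (parquet by default) together with a few per-file statistics
SELECT file_path, file_format, record_count, file_size_in_bytes
FROM prod.db.table.files;
```

Every feature in the list above is built on this information: snapshots power time travel and branching, while the per-file metadata powers hidden partitioning and schema evolution.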

Back to the Future!

Time travel leverages the snapshot mechanism to provide a simple and reliable way of moving back in time should any introduced changes (schema evolution, new data, slowly changing dimensions) turn out to be breaking for the rest of the pipeline.

How to use:

First, list the snapshots to select the point in time you would like to go back to.

SELECT * FROM prod.db.table.snapshots;

When the snapshot is selected, you can use either its ID or the timestamp, and… it’s time to time travel!

-- time travel to April 16, 2024 at 12:21:12

SELECT * FROM prod.db.table TIMESTAMP AS OF '2024-04-16 12:21:12';

-- time travel to snapshot with id 10963874102873L

SELECT * FROM prod.db.table VERSION AS OF 10963874102873;
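The queries above only *read* the past state. If the table itself should go back in time, a rollback can do that. Here is a small sketch, assuming the Iceberg SQL extensions are enabled in Spark and that the catalog is named `prod` (both are assumptions about your setup; the snapshot ID and timestamp are simply reused from the examples above):

```sql
-- Make the given snapshot the current state of the table
CALL prod.system.rollback_to_snapshot('db.table', 10963874102873);

-- Or roll back to whichever snapshot was current at a point in time
CALL prod.system.rollback_to_timestamp('db.table', TIMESTAMP '2024-04-16 12:21:12');
```

A rollback only changes which snapshot is current, so it is a metadata operation and no data files are rewritten.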

Branching for the win!

Whether it is your code or your data, branches can easily save your skin during the lifespan of a project (honestly, if you are not using them already, please reconsider). To help prevent pipeline failures caused by data quality issues, Netflix has also introduced the public Write-Audit-Publish (WAP) framework. Your data first lands on an audit branch, where third-party tools evaluate the data quality before it is merged into the main branch and exposed for public use.

How to use:

Start by creating a branch and loading the data into it.

ALTER TABLE prod.db.table CREATE BRANCH `my-audit-branch` RETAIN 7 DAYS;

Then, when you are sure the DQ requirements are met, fast-forward main to the audit branch and enjoy your well-kept, clean, and reliable table.

CALL prod.system.fast_forward('db.table', 'main', 'my-audit-branch');
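The part that happens between creating the branch and fast-forwarding it, i.e. loading and auditing the data, could look roughly like the sketch below. It assumes the Iceberg SQL extensions are enabled and, purely for illustration, a table with two columns (a number and a string); the inserted values and the check itself are hypothetical.

```sql
-- Write: new data lands on the audit branch, main stays untouched
INSERT INTO prod.db.table.`branch_my-audit-branch` VALUES (1, 'a'), (2, 'b');

-- Audit: read the branch and run whatever data quality checks you need
SELECT count(*) AS row_count
FROM prod.db.table VERSION AS OF 'my-audit-branch';

-- Clean up: once main has been fast-forwarded, the branch can be dropped
ALTER TABLE prod.db.table DROP BRANCH `my-audit-branch`;
```

Readers of the main branch never see the new rows until the fast-forward happens, which is the whole point of the audit step.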

Partitioning is obvious, why hide it?

Wait, Iceberg is not going to *hide* the partitions, right? Right 😀 What it hides is the bookkeeping: Iceberg derives the partition values from the column values itself. In Hive, if a query did not include the partition column in the `where` clause, the whole table was scanned to find the data. This is particularly painful when looking for a short period of data, e.g. 1 hour, when the table is partitioned daily or monthly and the user did not specify the partition in the query.
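To make the Hive-side problem concrete, here is a rough sketch using a hypothetical Hive-style table called `logs` with an explicit `event_date` partition column (both names are made up for illustration):

```sql
-- Hive-style layout: the partition is a separate, explicit column
CREATE TABLE logs (id bigint, data string, ts timestamp)
PARTITIONED BY (event_date string);

-- Filtering on ts alone says nothing about event_date,
-- so the engine has no partition to prune on
SELECT * FROM logs
WHERE ts BETWEEN '2024-04-16 00:00:00' AND '2024-04-16 01:00:00';

-- The user has to know the layout and repeat the filter on the partition column
SELECT * FROM logs
WHERE event_date = '2024-04-16'
  AND ts BETWEEN '2024-04-16 00:00:00' AND '2024-04-16 01:00:00';
```

The second query is correct but slow, and nothing warns the user about it. This is the trap hidden partitioning is meant to remove.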

How to use:

In order to leverage the hidden partition mechanism, we need to define the partition on one of the columns. Following the example of extracting 1 hour of data from the table, there is no need to create a separate column `hour` with values filled based on the `timestamp` column. Instead, we can do the following:

CREATE TABLE prod.db.sample (

id bigint,

data string,

ts timestamp,

year int)

USING iceberg

PARTITIONED BY (bucket(8, id), year, hours(ts));

No new column was added, yet when the user asks for an hour of data with the following SQL, Iceberg will only scan the partitions for the hour needed.

SELECT * FROM prod.db.sample

WHERE ts BETWEEN '2024-04-16 00:00:00' AND '2024-04-16 01:00:00';

“Evolution rules!” ~ Darwin (probably)

As the metadata files are separate from the data files, it takes seconds to apply any changes to your schema. Ah, but will it cause the data to be rewritten? If I add a new column or a new partitioning field, will all the data be lifted and replaced in a new order? To be honest, it depends. By default, *no*. Iceberg will apply those changes to all new incoming data, and what is already loaded into the table will be left untouched. For existing rows, new columns will be read as nulls, and the new partitioning field will not be applied to the files already written. But! You can ask it to rewrite the data and apply all the changes to the history too! Flexible and agile, suit yourself! Amazing, isn’t it?
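How to use: a minimal sketch, assuming the `prod.db.sample` table created earlier, a catalog named `prod`, and the Iceberg SQL extensions enabled in Spark. The `category` column is a hypothetical name chosen for illustration.

```sql
-- Add a column: metadata-only, existing rows simply read it as NULL
ALTER TABLE prod.db.sample ADD COLUMNS (category string);

-- Rename a column: again no data files are touched
ALTER TABLE prod.db.sample RENAME COLUMN data TO payload;

-- Add a partition field: used for newly written data only
ALTER TABLE prod.db.sample ADD PARTITION FIELD category;

-- Optional: ask Iceberg to rewrite the existing data files as well.
-- 'rewrite-all' forces every file to be rewritten, which can be expensive.
CALL prod.system.rewrite_data_files(
  table => 'db.sample',
  options => map('rewrite-all', 'true')
);
```

The first three statements finish quickly regardless of table size, because they only write new metadata. The last one is the opt-in, heavyweight path for when the history should follow the new layout too.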

What next?

Stay tuned for more articles about Iceberg, including a deep dive on time travel, a closer look at how hidden partitioning works on different engines, and a WAP framework implementation!

Sources

https://iceberg.apache.org/docs/1.5.0/spark-queries/#sql

https://iceberg.apache.org/docs/1.5.0/spark-queries/#references